Jing Xu from our Research and Thought Leadership team explores two examples of how Artificial Intelligence supports Cambridge Assessment English in generating over half a billion marks every single year.

In a world of instantaneous digital communication, next day delivery and personalisation, our learners can find it hard to understand the time lag between sitting an exam and receiving their results. Also, results need to go beyond pass/fail grades because, as part of our commitment to learners, we need to provide detailed diagnostic feedback to inform learners’ future language progression.

We have long recognised that integrating artificial intelligence (AI) into our processes, products and services is part of the solution to the challenges of demand and expectation. In 2013, in response to these issues, Cambridge Assessment English started funding the Institute for Automated Language Teaching and Assessment (ALTA), an interdisciplinary research team drawing on expertise from across the University of Cambridge.

Working with ALTA and the tech-transfer start-ups ELiT, iLexIR and EST, we created two auto-markers for writing and speaking tests – both are based on AI technologies driven by big data which is underpinned by the experience, expertise and judgement of examiners. In the development of the auto-marker for writing, tens of thousands of marked, graded and annotated scripts, amounting to millions of words, were used as training data to enable the auto-marker to identify the different patterns and features of language associated with different levels of English proficiency. This, in turn, allows the auto-marker to analyse an unmarked piece of writing and to give it a level. The same process was used when developing the speaking auto-marker, although this was a more challenging process because a learner’s accent, intelligibility and the quality of sound can affect the accuracy of the auto-marker.

One application of writing auto-marking technology is the Linguaskill Writing test, which this animation illustrates in more detail.

An AI auto-marker is a very powerful tool but human examiners still perform an irreplaceable role in high-stakes language assessment, as an auto-marker does not (fundamentally) understand English. For example, one way it assesses ‘vocabulary use’ in speech and writing is by the similarity between the bigrams (two consecutive words) used in a candidate’s response and those used by learners at various proficiency levels. Based on AI logic, a candidate is more likely to belong to a certain proficiency level if they use similar vocabulary to learners at that level, but this ‘doppelganger’ logic has limitations when applied to individualistic, high-level language use such as humour, wordplay, analogy, or metaphor.
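As a rough illustration of the bigram comparison described above, the Python sketch below counts the bigrams in a response and picks the proficiency level whose reference text is most similar. It is not the auto-marker's actual implementation: the function names, the choice of cosine similarity and the tiny made-up reference samples are all assumptions for the sake of the example.

```python
from collections import Counter
from math import sqrt


def bigrams(text: str) -> Counter:
    """Count pairs of consecutive lower-cased words in the text."""
    words = text.lower().split()
    return Counter(zip(words, words[1:]))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bigram count vectors (0.0 if either is empty)."""
    dot = sum(count * b[bigram] for bigram, count in a.items())
    norm_a = sqrt(sum(c * c for c in a.values()))
    norm_b = sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def closest_level(response: str, reference_texts: dict[str, str]) -> str:
    """Return the level whose reference bigrams are most similar to the response."""
    response_bigrams = bigrams(response)
    scores = {
        level: cosine_similarity(response_bigrams, bigrams(text))
        for level, text in reference_texts.items()
    }
    return max(scores, key=scores.get)


# Hypothetical reference samples, invented for this sketch. A real system
# would draw on a large corpus of marked learner scripts instead.
REFERENCE_TEXTS = {
    "lower": "i like my school . i like my friends . my school is big",
    "higher": "in my opinion the school provides a wide range of opportunities",
}

level = closest_level("i like my school and my friends", REFERENCE_TEXTS)
print(level)
```

The sketch also hints at the limitation noted above: an inventive phrase that shares few bigrams with any reference sample scores close to zero against every level, so the comparison says little about how good the language actually is.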

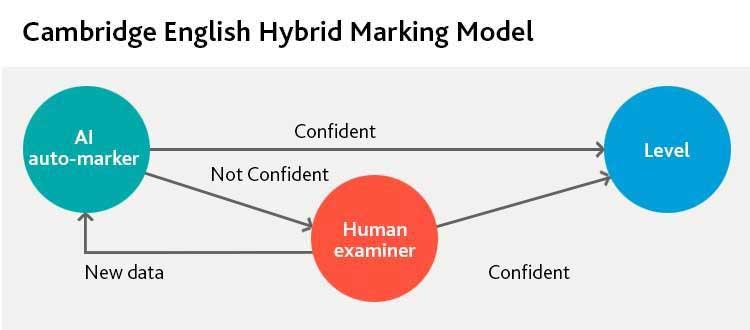

The speed and power of the auto-marker therefore need to be combined with the human judgement of examiners, annotators, assessment experts and data analysts. This type of AI is called Human-in-the-Loop (HILT or HILT-AI), whereby humans fine-tune and test the performance of the AI. This ‘hybrid’ marking model, combining the strengths and benefits of AI with those of human examiners, has many applications and can also reduce costs, save time, improve efficiency and give learners a faster turnaround for their results. It also enhances the quality of the exam, since two different judgements are combined to create a powerful assessment and learning tool.

With the application of the auto-marker we keep the Examiner-in-the-Loop, and we also use the technology to keep the Learner-in-the-Loop.

Write & Improve is a good example of how this works. Write & Improve is a free online tool which helps learners practise their writing by providing a wide range of tasks at different levels for learners to complete in or outside the classroom. Supported by AI technologies, Write & Improve not only marks the writing and gives it a level, but it also identifies errors, makes suggestions and gives supportive feedback - all in under 15 seconds.

The learner can make corrections and resubmit their writing repeatedly, and the suggested improvements will change with each submission because the feedback is scaffolded. Too much ‘red pen’ is ultimately demotivating for learners, and counter-productive; Write & Improve gives feedback on common errors after the first submission and then, as the learner edits those errors, surrounding errors are marked on the next view, where possible and appropriate. The aim is to keep the learner motivated and engaged, and to help them identify and eliminate common and repeated errors, so that their teacher can focus on those issues which require ‘human’ support, such as discourse organisation, argumentation or nuance.
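The scaffolding idea can be pictured with a small Python sketch. This is only a toy model under stated assumptions, not how Write & Improve is implemented: the `Error` record, the `common` flag, the `select_feedback` function and the three-word `window` are all hypothetical. The first view shows only common errors; later views also show other errors that sit close to errors flagged previously.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Error:
    position: int     # word index of the error in the learner's text
    description: str  # short feedback message
    common: bool      # True for frequent error types shown first


def select_feedback(
    errors: list[Error], previously_flagged: set[int], window: int = 3
) -> list[Error]:
    """Choose which detected errors to display on this view.

    Common errors are always shown. Other errors are shown only when they
    lie within `window` words of a position flagged on an earlier view.
    """
    shown = [e for e in errors if e.common]
    shown += [
        e
        for e in errors
        if not e.common
        and any(abs(e.position - p) <= window for p in previously_flagged)
    ]
    return sorted(shown, key=lambda e: e.position)


# First submission: three errors detected, only the common one is displayed.
first_errors = [
    Error(2, "missing article", True),
    Error(4, "word choice", False),
    Error(20, "register", False),
]
first_view = select_feedback(first_errors, previously_flagged=set())

# Second submission: the learner fixed the article, so the nearby
# word-choice error is now displayed; the distant one stays hidden.
second_errors = [e for e in first_errors if e.position != 2]
second_view = select_feedback(second_errors, previously_flagged={2})
```

Limiting each view in this way keeps the amount of ‘red pen’ small, which is the motivation given above for scaffolding the feedback.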

This article provides two examples of how AI has changed the way Cambridge Assessment English operates and what we can offer learners. Through its tests, Cambridge Assessment English generates over half a billion marks every single year (taken from five million exams with an average of 70 questions per exam, across 700 test versions). AI is the tool for combining this mass of data, unlocking patterns and discovering the insights which will lead to new ways of learning, teaching and testing - but always with the human, the examiner and learner, in the loop.

Dr Jing Xu

Senior Research Manager, Cambridge Assessment English
