Since its launch in November 2022, ChatGPT has gained significant attention in education. A mixture of excitement and fear spread like wildfire among educationalists, policy-makers, teachers, lecturers and students. As an immediate response, many universities banned ChatGPT over fears of misinformation and plagiarism, and a range of AI-detection tools were developed to identify AI-generated content (for example, see GPTZero, Copyleaks, and WinstonAI). At the moment, we seem to be embracing the new reality by focusing on ways in which we can safely and effectively use AI in teaching, learning and assessment (as opposed to banning it).

As a result, many recommendations on using ChatGPT in assessment have been put forward (for example, see JCQ's recommendations). However, we have not yet had a chance to explore the different ways in which students currently use ChatGPT in real-world assessment contexts (particularly coursework). We believe that understanding how students use generative AI in their learning and assessment will inform the current guidelines on how to use it safely, effectively and fairly.

At the start of the year, Cambridge Assessment International Education ran a study that investigated the quality of essays written with the help of ChatGPT and compared them to essays written without it. The study recruited three undergraduate students from UK universities, who were asked to use ChatGPT to write 1,500- to 2,000-word essays in IGCSE Global Perspectives. They were interviewed after completing the essays.

The students did not know much about the topics they were writing about, so it was anticipated that they would use ChatGPT primarily for content generation (where ChatGPT provides information and answers to questions quickly). Students also often use ChatGPT for assistive purposes (helping them structure their answers and/or checking grammar) and for a more personalised learning experience (ChatGPT providing personalised feedback and enhancing learner engagement) (1). We acknowledge that content generation is one of ChatGPT’s weakest points (2); we know it regularly generates inaccurate content (so-called “hallucinations”) and, therefore, cannot be “trusted”. But we have reason to believe that there is a particular learner profile that would be tempted to use it primarily for content creation. We wanted to understand this process.

We asked students about:

- How they used ChatGPT to write essays;

- How they integrated ChatGPT-generated content into their essays; and

- How well they think ChatGPT helped with their essay writing.

Three main themes emerged from our conversations with students:

- Orientation and quick information access

- Verification and referencing of the ChatGPT-generated content

- The importance of higher-order thinking skills

Orientation and quick information access

The students used ChatGPT primarily for content generation; in particular, (a) to get an overview of the topic they knew little about, and (b) to quickly gather specific information on a topic. In this sense, they felt that the purpose of ChatGPT was very similar to the purpose of any other Internet search engine – to provide information, just much quicker and more user-friendly:

“It's just […] a better Wikipedia. It's like times… infinity better Wikipedia.”

In this instance, students did not seem to recognise that ChatGPT differs from Wikipedia and Internet search engines in how it makes sources available. With a search engine, a source can be identified by checking the author or website of each page returned; with Wikipedia, by checking the references that each page provides (although it is questionable whether students do this in reality). ChatGPT offers no comparably easy route to its sources.

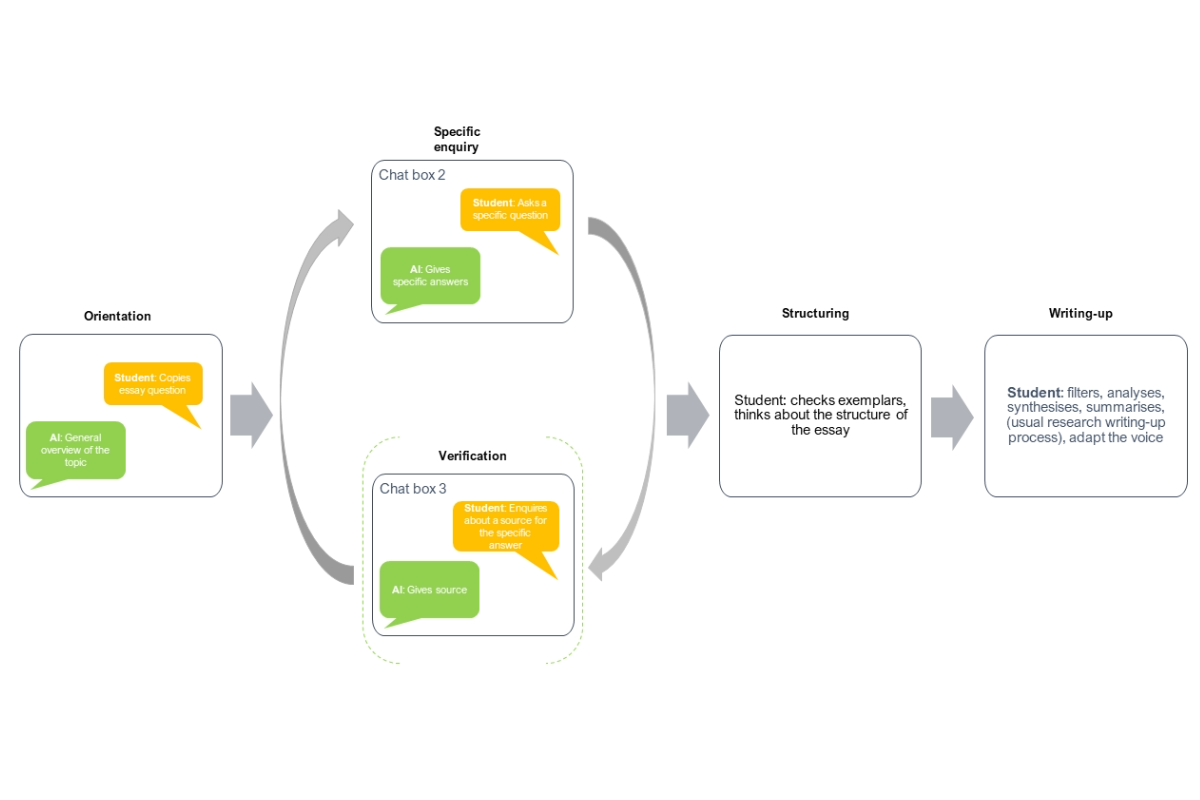

Students reported starting off by entering the essay question into the ChatGPT chat box or asking a general question about the topic (“orientation”). This helped students who did not know much about a topic to get a general overview of it. This was followed by multiple “specific enquiries”; students would start multiple chats to enquire about specific aspects of the topic (Figure 1):

“So, I'd start new chats […] the pattern I followed was: I'd ask it a general question like [what is the] Christian debate on abortion, for example, and it would give maybe four or five points. And then after that, I'd ask more with details, facts and statistics.”

Occasionally, students attempted to verify the ChatGPT-generated content (“verification”) by either asking ChatGPT to provide the source or searching for the sources using Internet search engines (indicated by green brackets in Figure 1). They then gathered all the content that ChatGPT had generated and started thinking about the “structuring” of their essays. Finally, the students finalised their essays by filtering, synthesising, rewriting and evaluating the ChatGPT-generated content.

Figure 1. Students’ process for essay writing with the help of ChatGPT. (PDF version)

Verification and referencing of the ChatGPT-generated content

The students had a strong sense of ethics around verifying and referencing AI-generated content, but when asked about the verification and referencing practices they used in their own essay writing, they reported that they often skipped this part of the process. They attributed this to the difficulty of verifying the content, to time constraints, and to a lack of understanding of the expected standard of verification and referencing:

“I feel like it's always important to verify, like double-check that it takes you to one of the big sources.”

“I [should have] probably verified it […] we didn't have time to […] verify it. I probably should do that because it's probably […] I don't know how reliable it is [...] it doesn't specifically give you sources when it gives you the information. You can't do that for every piece of information that you're gonna get, because otherwise, it's just gonna go on.”

The importance of higher-order thinking skills

Integration of ChatGPT-generated content into essays requires a high level of critical and metacognitive thinking and the ability to employ the theory of mind. In other words, students had to evaluate the AI-generated content, analyse, synthesise, filter, summarise, and adapt this content to make it sound like them:

“It's not that bad because you can then apply your research skills and select and synthesise. You will get a very low mark just [by] using ChatGPT. You can't just copy and paste. You'd need to have [...] the skills developed [...] but then I feel like those skills are developed from not using a source like ChatGPT [...] it's [...] a paradox.”

The process of verification and the decision-making behind it require accurate metacognitive knowledge about one’s ability to distinguish between credible and non-credible ChatGPT-generated content (“Do I need to verify this?”, “Am I able to assess the trustworthiness of this content?”, etc.). This requirement can be detrimental for students with a poor knowledge foundation and poor learning skills. In other words, students who lack accurate metacognitive knowledge or high levels of critical thinking might, with a false sense of confidence, use inaccurate ChatGPT-generated information without verification.

Overall, the students found ChatGPT useful for quick access to information about topics they were not familiar with. They felt that the way ChatGPT summarised the content at a high level, giving first directions for further research, was very beneficial. On the other hand, students understood that ChatGPT generated both accurate and false (and outdated) information, and that verification was often not possible. They recognised this as a major issue.

These findings are consistent with current wider claims about ChatGPT’s weakness in generating content, and have implications for students who are tempted to use it for this purpose. Students with little topic knowledge and poorer critical thinking and metacognitive skills could be particularly negatively affected. Given that students with lower academic performance often also display poor critical thinking (3,4) and poor metacognitive skills (5,6), it would be fair to assume that low-performing students could be especially negatively affected by using ChatGPT for content generation.

While the role of ChatGPT in education will probably remain a topic of contention for some time, we can continue to collect evidence about its benefits and threats, so we can make informed recommendations about its use in learning and assessment.


References

- Kasneci, E., Seßler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., ... & Kasneci, G. (2023). ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences, 103, 102274. Hacker, D. J., Dunlosky, J., & Graesser, A. C. (2009). Handbook of metacognition in education. New York: Routledge.

- University of Cambridge (2023, April). ChatGPT (We need to talk). https://www.cam.ac.uk/stories/ChatGPT-and-education

- Fong, C. J., Kim, Y., Davis, C. W., Hoang, T., & Kim, Y. W. (2017). A meta-analysis on critical thinking and community college student achievement. Thinking Skills and Creativity, 26, 71-83.

- Behrens, P. J. (1996). The Watson-Glaser Critical Thinking Appraisal and academic performance of diploma school students. Journal of Nursing Education, 35(1), 34-36.

- Pintrich, P. R., & De Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82, 33-40.

- Young, A., & Fry, J. D. (2008). Metacognitive awareness and academic achievement in college students. Journal of the Scholarship of Teaching and Learning, 8(2), 1-10.